After following recent comment threads and reading the ubiquitous posts on “AI” on LinkedIn, I have come to realize that we are living in the “era of shallow thinking”. And it is this problem that is holding us back from doing more “profound” things in computer science.

Today’s Large Language Models (LLMs) like ChatGPT remind me of the “summary” books people buy to get the major points of many important books in their field without having to read them. Like these summary books, LLMs automatically summarize meetings, documents, or any subject you throw at them so you don’t have to be there, think, or even interact with people – a remote worker’s dream scenario.

If your article needs information about a subject, instead of reading about it and studying it for yourself, you simply prompt for the information, let a generic, often corporate-sounding text appear, and then cut and paste it into whatever you are “supposedly” writing.

Yes, LLMs are useful. They can save time. No one is arguing that. But there is no intelligence there. Worse, there is no “humanity” there. There is nothing that makes this text yours. It is generated from others’ text, making the author insignificant. It makes the text boring.

Magic

At first, LLMs seem like magic. The summaries that used to be done by hand are now automated and often do a decent job. Unfortunately, this “seems like magic” quality has led many to believe that this is “AI” rather than statistical analysis or a super autocomplete, and the “magic” that enchants has created an entire industry of “exaggeration”: artificial general intelligence is around the corner, and ChatGPT27.3 will eliminate 90% of all jobs.

And when we humans are “enchanted” by “magic”, we tend to stare blankly into space waiting for the magic to happen – no participation necessary. No thinking, just ask and you shall receive. And the more generic a text is, the less likely we are to remember it.

Instant Apps

This world of shallow thinking is also reflected in today’s software. The desire to come up with an idea quickly, create an app, and then make millions is what many of our computer science students aspire to. There is no thought or passion for the “pain point” these students are trying to address. It’s more like a game whose objective is to be clever and make a lot of money.

We have turned away from the noble profession of computer programming and become “coders” who script together existing packages and claim that computer programming itself will disappear completely, replaced by cleverly gluing together statistical LLMs with some “logic”. This won’t work. Statistical LLMs are like a frog: kissing them will not magically turn them into logic.

Rebellion

But people who see through the hype are starting to rebel against this shallow thinking, and this group includes students. I am pleasantly surprised that the current generation of university students sees through this hype while the older generations do not. The older generation sees this as another way to cut costs by replacing workers, while others see a way of making millions and billions by creating mega-machines that they hope will turn a frog into a prince.

Students tell me that they could join the new “AI” companies and make a lot of money for a couple of years, but that they would then have to find a “real” job when the company fails. I hear that story a lot.

This rebellious group also includes those of us who have been around a while and have worked in computational linguistics for decades. We have known all along that models based on statistics can be useful, but in the end, they are not intelligent. With books like “The AI Con” and “Empire of AI”, we see the folly of our current direction, and we need to step back and consider how this age of “shallow thinking” is detrimental to the human intellect.

Good for Humans?

The rebellion seems to have a common theme: is this all good for humans? Are we creating a world where we humans no longer interact, study a subject deeply, or try to produce “deeper” answers to questions, giving our own neural-network brains the time to come up with “profound” ideas rather than shallow ones?

There are a few people out there talking about how “concepts” and “logic” are the key to true artificial intelligence, something that statistics cannot provide. As I have repeated in many of my talks at universities, we must “give” computers linguistic and world knowledge and teach them how to use it. Like our own children, they will not learn it on their own, and finding patterns in text certainly cannot supply it.

AI Is Hard

People who are rebelling are also saying this: “true” AI is hard. It will not come from large language models because, mathematically, probability can never be logical, and true “artificial intelligence” needs to be based on rules, logic, and concepts.

Luckily, there are people out there pushing in this direction, a direction we need to head in sooner rather than later. We need to stop producing students who are looking for a quick million by solving a “pain point”. Otherwise, all we do is produce more summaries that we neither read nor care about, and our brains begin to atrophy intellectually from disuse.

New Era of Deep Thinking

The era of shallow thinking needs to end, and end quickly. We need to start taking more time and dedicating ourselves to more profound endeavors, like producing “real” artificial intelligence: something that will take time, effort, and, most importantly, human thought and ingenuity – not mathematical statistics.

We need to start producing students who study something deeply, allowing them to create a world that is much more stimulating and rewarding than simply feeling “instant” gratification.

Some of us are trying to do just that despite the “smearing” by those who are in the shallow end of the intellectual pool and who, unfortunately, have only a “shallow” understanding of today’s technologies.

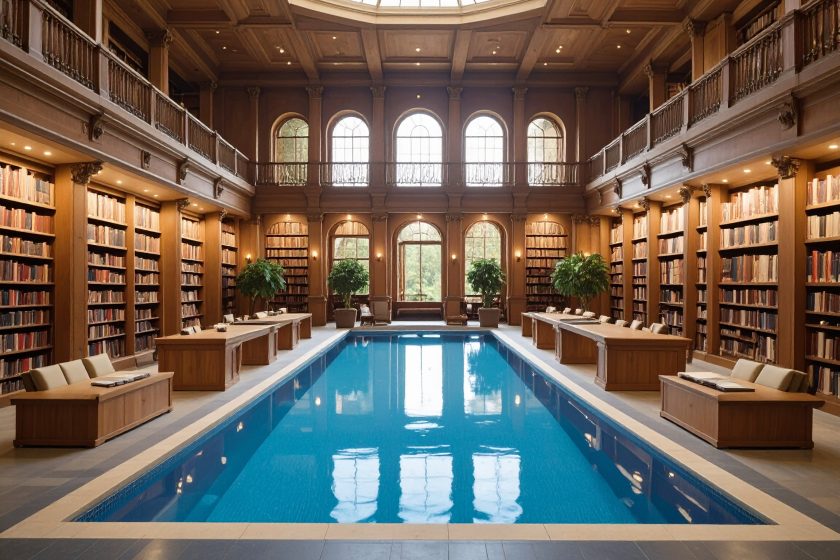

We need to be in the deep end and tread water for a long time…

About the Author

David de Hilster has been a research scientist in Natural Language Processing for over 40 years and is co-creator of the computer programming language NLP++. De Hilster is also an adjunct professor at Northeastern University’s Miami campus and heads the NLP Blockchain project, whose goal is to produce logical and rule-based NLP using human ingenuity. He is currently finishing a textbook on NLP++.
