Some time ago, before all the extreme hype about what people now call “AI”, I saw an article about a statistical program that took thousands of photos of convicts considered extremely “non-average” in appearance, averaged them, and produced an extremely beautiful face. That has always fascinated me.

Thinking about it, the reason any face appears beautiful to us is that more neurons fire in our brains when we see faces we consider beautiful than when we see non-average faces. The more neurons that fire, the more pleasure and comfort we feel, and the more stimulating it becomes to stare at those faces.

This is because, over the tens of thousands of faces we see in our lifetimes, our neural networks average them into a face-recognition network. Faces that best fit that average fire more neurons in our brains, and we fixate on them longer. This is borne out by experiments showing that babies fixate more on symmetrical faces than on asymmetrical ones, symmetry being one hallmark of an averaged face. Even with the far smaller number of faces they have seen, their brains have already averaged them into a face-recognition network.

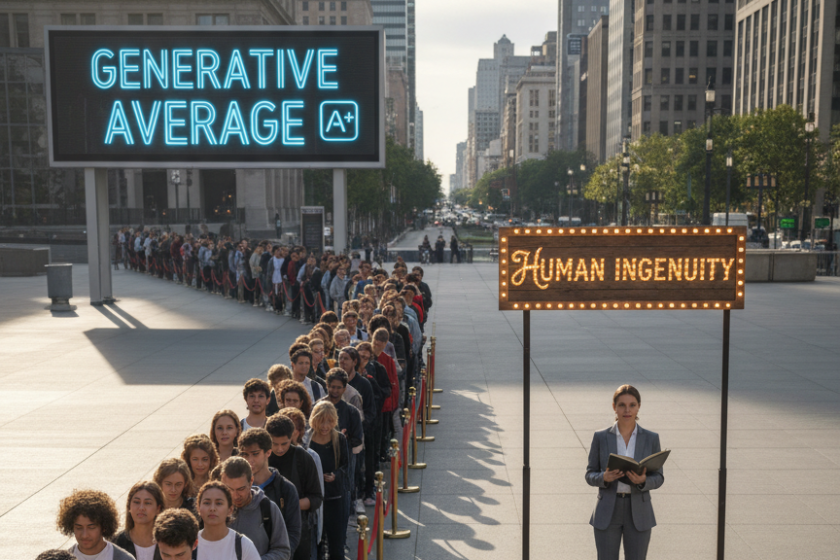

The “Average” Industry

Proof of this is something we have all noticed: visual generative programs always generate images and videos that portray people more flatteringly than they really look. That is because generative programs make us look more average, and more average is more stimulating and therefore more beautiful.

In fact, what we consider beauty is, in reality, the most “average”. The same holds true for all generative programs (which I refuse to call intelligent).

Billions of dollars are made from programs that average our images. Feed one any photo and ask for a headshot, and the result always looks “better” than we really do, which strokes our egos. The output is a bit more average than our own face, so we look better. We ALL want to look more beautiful or handsome, and people are making huge sums of money off this side effect of generative image programs.

Slop Is the Average

The same can be said for text. Large language models generate average text, and sometimes that average can be beneficial to us. Having worked in natural language engineering for over 40 years, I think the jury is still out on where large language models will finally land. Because of their statistical nature, they can’t be placed in critical paths, and humans reading text are still the norm in many critical paths in billion-dollar industries today.

But the reason so many people are being bamboozled by these village idiots that we prod with “prompts” is that the text they generate is “average”. That is why so many people use them for summaries. Every tool we use today seems to have a button for generating summaries. It’s big business right now.

But inevitably, when we read these summaries, we realize that these hyper-autocompletes have averaged out the important and interesting nuances of a meeting. All the summaries start to look the same, and they uncomfortably reveal how filled with repetitive tasks our corporate lives really are. Thinking about that can be depressing at times. These summaries have even eliminated repetitive and questionable positions in top-heavy corporations.

The word “slop” was famously chosen as “word of the year” for 2025 because it perfectly describes the averaging of everything into a common trough of indistinguishable mush.

Slop is the result of statistical programs finding the average.

Bamboozled by Average Text

Just like beautiful faces, which fire more neurons in our brains, average text from large language models fires more neurons in our brains and thus makes us fixate on its output more than on canned, static text. That is why large language models are so enticing as chatbots. They speak in the most beautiful and pleasant way. They almost never make grammatical errors, and they are always polite.

When we ask questions, polite and conversational answers fire more neurons in our brains, and we tend to listen to people who are polite much more than to people yelling at us. When we interact with these chatbots, we get positive reinforcement, since the prompt is in question form and the immense amount of text these probabilistic programs train on comes mostly from polite human interactions. Because of this, we start to develop an emotional attachment to something that is completely statistical and devoid of intelligence.

After a while, however, it starts to dawn on us that average text feels more and more emotionally “average”. And thus the word “slop” came into vogue, garnering the coveted “word of the year” prize. We realize that generative programs generate the average, not the exceptional or the interesting.

Slop Hypsters Keep Pushing “Average”

Because our brains are so stimulated by the average, the slop hypsters keep hyping the emotionally bamboozling average outputs as “intelligent”, using the fear of out-of-control generative programs and agents as a reason to keep us staring at these average outputs like babies staring at symmetrical faces. They hope our over-stimulated brains will buy into the hype that “average” is the way to superintelligence.

They need either trillions in funding, which hogs our resources, or butts in seats to keep their “AI” classes or groups alive. We no longer need programmers, they say, because “Agentic AI” can write the code for us, and everyone can generate a movie from a simple text prompt. Half the jobs will disappear within the next year, they say. And we hear this from somebodies like Bill Gates and nobodies like Sam Altman.

But these are grand fallacies.

Away from the Average

Our own neural network brains may be stimulated by the average, but intelligence in the end requires the unique, the unusual, the unexpected. The unexpected is not only what moves human intellect forward; emotionally, it is also the spice of life.

The slop hypsters keep hyping the average because of ego or dollars, but we, the “average” public, are tired of the average. We are tired of our headshots looking like everyone else’s. We are tired of our emails sounding like everyone else’s. We are tired of our computer code mimicking someone else’s that we can’t fully understand or fix.

We are tired of generative statistics which try to stimulate the most neurons in our brains instead of creating new and interesting pathways.

Average Out, Humans In

Luckily, more and more people are seeing through the “average” and starting to realize that human-generated anything is always more interesting than computer averaged everything.

Real AI is real hard to do and certainly not average. We can get there in a safe and humane way if we humans build it instead of settling for the mechanically averaged. Thank goodness there are people turning to humans instead of machines to write more “intelligent” programs. It is a long and hard road that will take decades, but it won’t cost trillions or waste immense amounts of resources.

It will require tens of thousands of intelligent humans building a future that is controlled and truly useful. And thank goodness there are alternatives: more interesting paths to more intelligent programs than the paths “most taken”.

We humans can write computer code that anticipates alternative paths from a very small set of data to do specific, useful tasks, and do it with deep understanding and highly logical reasoning. Whereas generative programs train on huge amounts of data, humans can intelligently analyze a finite set of data and create general programs that are controlled, logical, and maintainable.

It is doable. Human ingenuity and the human brain are the answer, not huge “AI” (Averaging Industries) in the sky.

About the Author

David de Hilster is an adjunct professor at Northeastern University in Miami and has worked as a natural language engineer in industry for over 40 years. He is co-author of the programming language NLP++ and co-founder of the NLP Foundation, which is putting NLP back into the hands of human programmers and computational linguists. He is also an accomplished artist, an author, and a serious physics aficionado working on the Four Universal Motions in physics with his father.
